Apache Spark is an open-source data processing framework built for fast, distributed data processing. It is often used alongside the Hadoop ecosystem (for example, it can run on YARN and read from HDFS), but it is typically much faster than Hadoop MapReduce. With Spark, we can analyze and process big data far more efficiently. It is also very effective for near-real-time data processing, which makes it a good fit for use cases such as streaming, machine learning, and interactive analysis.

Speed: Speed is Spark's biggest selling point. It uses in-memory computing, keeping intermediate data in RAM wherever possible instead of reading from and writing to disk between steps. Because of this, Spark can be up to 100x faster than MapReduce for some in-memory workloads.

Ease of Use: You can use Spark with Python, Java, Scala, and R. For Python, Spark is available through PySpark. In other words, if you are comfortable with any one of these languages, you can get started with Spark easily.

Advanced Analytics: With Spark you can not only process data but also run complex analytics such as machine learning and graph processing. Spark MLlib (Machine Learning Library) is built in, so you can train ML models directly within Spark.

Real-time Data Processing: Another big advantage of Apache Spark is its support for real-time data processing. Its Spark Streaming module lets you process live data streams, which is useful in applications like fraud detection or social media analytics.

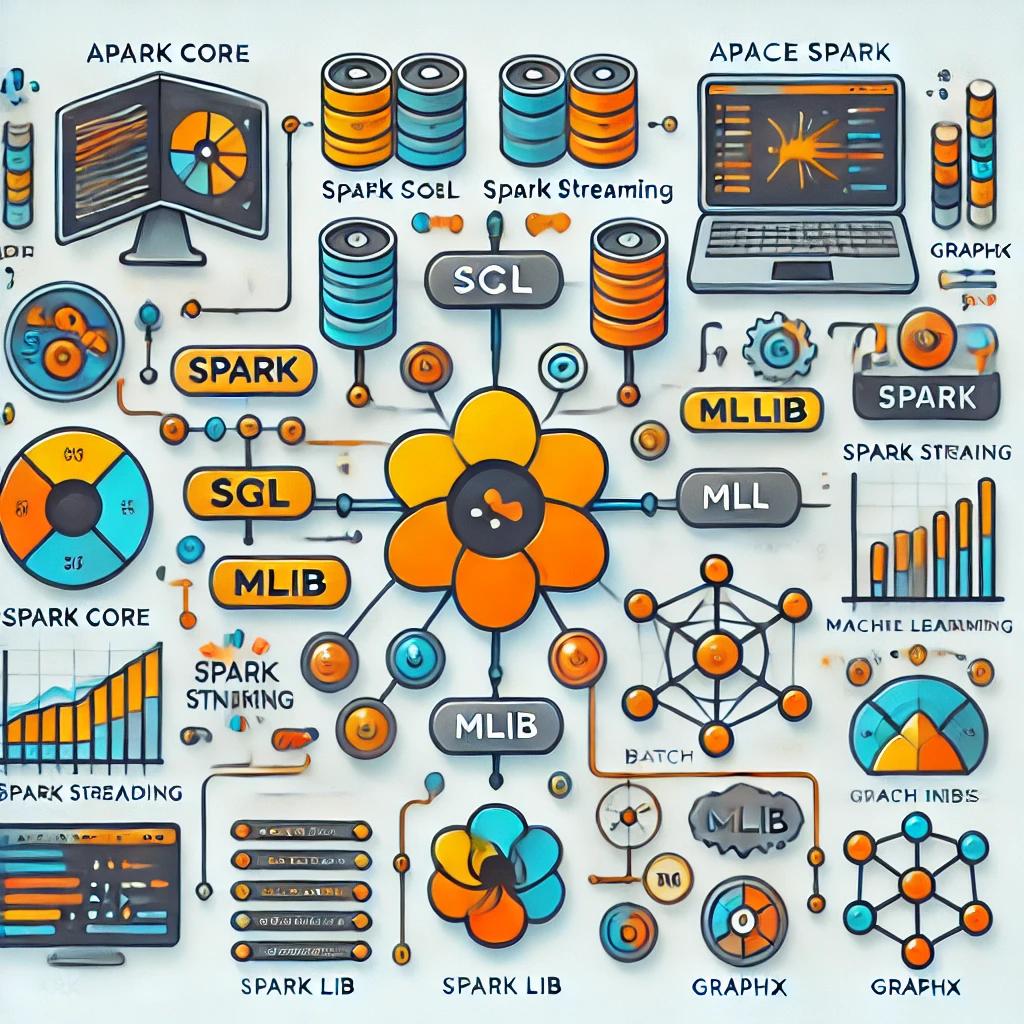

Spark Core: All of Spark's modules are built on top of Spark Core. It handles distributed task dispatching, scheduling, and I/O functionality.

Spark SQL: This component is used to process structured data, and it lets you run SQL queries. Spark SQL is especially useful when you need to work with relational data sources such as Hive tables or SQL databases.

Spark Streaming: This module is built for processing real-time data streams. It processes a continuous data stream as a series of micro-batches.
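The micro-batch idea can be sketched in plain Python, without Spark itself. This is only an illustration: the names `micro_batches` and `process_batch` are hypothetical, and the sketch groups events by count for simplicity, whereas Spark Streaming groups them by a time interval.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def micro_batches(stream: Iterable[int], batch_size: int) -> Iterator[List[int]]:
    """Yield consecutive batches of up to batch_size items from a stream."""
    iterator = iter(stream)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return  # stream is exhausted
        yield batch


def process_batch(batch: List[int]) -> int:
    """Stand-in for the per-batch computation: here, just sum the values."""
    return sum(batch)


# Pretend these events arrive one at a time: 1, 2, ..., 7
events = range(1, 8)
results = [process_batch(b) for b in micro_batches(events, batch_size=3)]
print(results)  # [6, 15, 7]
```

Each small batch is handled like an ordinary batch job, which is why the same processing logic can serve both batch and streaming data.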

MLlib (Machine Learning Library): If you want to build machine learning models, MLlib is very useful. It provides common algorithms for classification, regression, clustering, and more.

How Does Apache Spark Work?

Apache Spark is based on a distributed computing architecture, meaning it processes data in parallel across multiple machines. Spark's fundamental data structure is the RDD (Resilient Distributed Dataset). An RDD is an immutable, distributed collection that is fault-tolerant: if a node fails, the lost data can be recovered, because Spark records the lineage of transformations that produced each RDD and can recompute the missing partitions.
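To make immutability and recovery a bit more concrete, here is a toy, single-process model in plain Python. It is not Spark's API: the class `MiniRDD` and its method `simulate_failure` are hypothetical names invented for this sketch. It only shows the idea that a lost result can be rebuilt by replaying the recorded transformations on the original input.

```python
from typing import Callable, List, Optional, Tuple


class MiniRDD:
    """Toy model of an immutable dataset that remembers how it was derived."""

    def __init__(self, source: Tuple[int, ...],
                 lineage: Tuple[Callable[[int], int], ...] = ()):
        self._source = source    # original input, never modified
        self._lineage = lineage  # ordered transformations applied to the source
        self._cache: Optional[List[int]] = None  # computed result, may be lost

    def map(self, fn: Callable[[int], int]) -> "MiniRDD":
        # A transformation returns a new object; this one stays unchanged.
        return MiniRDD(self._source, self._lineage + (fn,))

    def collect(self) -> List[int]:
        if self._cache is None:
            # Rebuild the result by replaying the lineage on the source.
            data = list(self._source)
            for fn in self._lineage:
                data = [fn(x) for x in data]
            self._cache = data
        return list(self._cache)

    def simulate_failure(self) -> None:
        """Pretend the node holding the computed result has died."""
        self._cache = None


base = MiniRDD((1, 2, 3))
derived = base.map(lambda x: x * 2).map(lambda x: x + 1)

before = derived.collect()
derived.simulate_failure()  # the computed data is gone
after = derived.collect()   # recomputed from source plus lineage

print(before, after)  # [3, 5, 7] [3, 5, 7]
```

In this model, recovery needs only the original input and the list of transformations, not a saved copy of every intermediate result.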

Spark Application: A Spark application is a user program that runs on a Spark cluster and can launch multiple jobs.

Driver Program: The driver program runs the user's code and, through the SparkContext, connects to the cluster manager to acquire resources and coordinate the work.

Executor: Executors are processes that run on the cluster's worker nodes and execute the actual tasks. The driver program tells them what to process.

Tasks: Spark breaks each job into stages and then into tasks, which execute in parallel.
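The relationship between the driver, the executors, and the tasks can be imitated on a single machine with a thread pool. This is a rough analogy, not how Spark is implemented: the functions `split_into_partitions`, `task`, and `run_job` are hypothetical names, the "executors" here are just threads, and the job (sum of squares) is chosen only for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def split_into_partitions(data: List[int], num_partitions: int) -> List[List[int]]:
    """Split data into contiguous partitions of roughly equal size."""
    size = max(1, -(-len(data) // num_partitions))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def task(partition: List[int]) -> int:
    """One task: compute the sum of squares for a single partition."""
    return sum(x * x for x in partition)


def run_job(data: List[int], num_partitions: int = 4) -> int:
    """Play the driver: create one task per partition, then combine the results."""
    partitions = split_into_partitions(data, num_partitions)
    # The worker threads play the role of executors running tasks in parallel.
    with ThreadPoolExecutor(max_workers=num_partitions) as executors:
        partial_results = list(executors.map(task, partitions))
    return sum(partial_results)


total = run_job(list(range(1, 11)), num_partitions=4)
print(total)  # 385
```

The "driver" function never touches individual records. It only decides how the data is split and merges the partial results that come back.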

Data Analysis: If you need to analyze large datasets, you can write SQL queries with Spark SQL. For example, if you have customer data, you can query it to extract insights.
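As a minimal sketch of that customer-data example, the snippet below registers a small dataset as a temporary view and runs a SQL query over it with PySpark. The table name `customers`, its columns, and the function `spend_by_city` are assumptions made up for this illustration, and the function needs `pyspark` installed to run.

```python
from typing import List, Tuple

# Hypothetical table and column names, chosen only for this example.
QUERY = """
SELECT city, SUM(amount) AS total_spent
FROM customers
GROUP BY city
ORDER BY total_spent DESC
"""


def spend_by_city(rows: List[Tuple[int, str, float]]) -> List[Tuple[str, float]]:
    """Run QUERY with Spark SQL on a local SparkSession (requires pyspark)."""
    # Imported here so this module can be loaded even where pyspark is missing.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .master("local[*]")           # run locally, using all available cores
        .appName("customer-insights")
        .getOrCreate()
    )
    try:
        df = spark.createDataFrame(rows, ["customer_id", "city", "amount"])
        df.createOrReplaceTempView("customers")  # expose the DataFrame to SQL
        return [(r["city"], r["total_spent"]) for r in spark.sql(QUERY).collect()]
    finally:
        spark.stop()
```

Calling `spend_by_city([(1, "Pune", 100.0), (2, "Delhi", 250.0), (3, "Pune", 50.0)])` should return one `(city, total_spent)` pair per city, with the largest total first.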

Machine Learning: With PySpark and MLlib you can build predictive models. For example, to predict customer churn, you can use a logistic regression model from MLlib.

Real-time Analytics: With Spark Streaming you can analyze Twitter data or collect data from IoT devices to get real-time insights. This is used in fraud detection, stock market analysis, and similar applications.

ETL (Extract, Transform, Load): Spark can also be used for data warehousing. You extract the data, transform it, and then load it into a data store. Spark makes these ETL processes fast and efficient.

E-commerce Recommendation Systems: E-commerce companies such as Amazon and Flipkart can use Spark to build recommendation engines that give customers personalized product recommendations.

Social Media Analysis: Social media platforms such as Twitter and Facebook can use Spark for real-time analysis. It helps with tracking trends, analyzing user behavior, and targeting advertisements.

Financial Risk Analysis: Banks and financial institutions use Spark for fraud detection, risk management, and customer sentiment analysis.

Healthcare Data Analysis: Spark can also be used to analyze patient records, build disease prediction models, and analyze genetic data.

Apache Spark is a very powerful tool and a game-changer in the world of big data processing. Its speed, scalability, and versatility have made it an industry favorite. If you need to process large-scale data efficiently, Apache Spark is an ideal choice.

Any data-driven organization that wants fast, real-time insights will find Spark a must-have tool. And if you want to build a career in big data, learning Apache Spark can be very beneficial!