Technology surrounds us, and new tools are developed every day. Technology has made our lives better in ways we could not have imagined before, and bots are perhaps one of the most influential technologies around.

What are Bots?

A 'bot' (short for robot) is a software program that performs automated, repetitive, pre-defined tasks. Bots are designed to carry out a specific task repeatedly and automatically, without human assistance. They were developed to take the load off people by performing some tasks faster, and often more effectively, than a human could.

How do bots work?

Bots typically operate over a network. Bots that can communicate with one another use internet-based services to do so, such as instant messaging, interfaces like Twitterbots, or Internet Relay Chat (IRC). Bots are built from sets of algorithms that help them carry out their tasks, and different types of bots are designed differently to accomplish a wide variety of tasks.

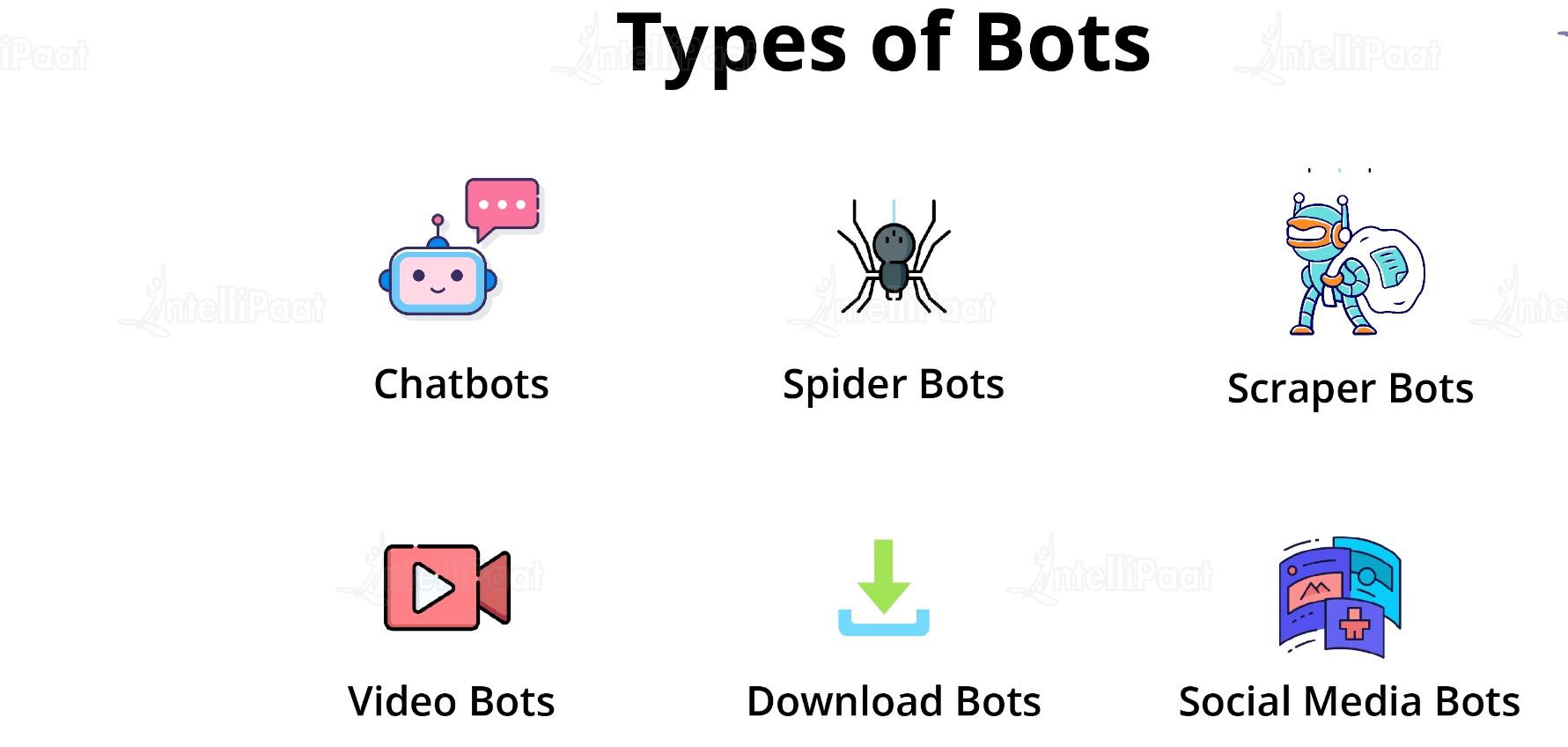
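To make the "set of algorithms" idea concrete, here is a minimal sketch of a rule-based bot loop. It assumes an in-memory list of messages stands in for a real network service such as IRC or instant messaging; `Message`, `run_bot`, `greet_rule` and `help_rule` are hypothetical names chosen for illustration, not part of any bot framework.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Message:
    sender: str
    text: str


# A rule looks at one message and returns a reply, or None if it does not apply.
Rule = Callable[[Message], Optional[str]]


def greet_rule(msg: Message) -> Optional[str]:
    # Hypothetical pre-defined task: answer greetings.
    if msg.text.lower().startswith("hello"):
        return f"Hi {msg.sender}!"
    return None


def help_rule(msg: Message) -> Optional[str]:
    # Hypothetical pre-defined task: list the commands the bot understands.
    if "help" in msg.text.lower():
        return "Commands: hello, help"
    return None


def run_bot(inbox: List[Message], rules: List[Rule]) -> List[Tuple[str, str]]:
    """Apply the rules to each queued message; return (recipient, reply) pairs."""
    outbox = []
    for msg in inbox:
        for rule in rules:
            reply = rule(msg)
            if reply is not None:
                outbox.append((msg.sender, reply))
                break  # the first matching rule wins
    return outbox


if __name__ == "__main__":
    inbox = [
        Message("alice", "Hello, bot"),
        Message("bob", "what time is it?"),
        Message("carol", "I need help"),
    ]
    print(run_bot(inbox, [greet_rule, help_rule]))
```

Messages that match no rule (Bob's question here) are simply ignored; a real bot would replace the in-memory inbox with a network client and run the loop continuously.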

Types of Bots

- Chatbots: bots that simulate human conversation by responding to certain phrases with programmed responses.

- Social bots: bots that operate on social media platforms and are used to automatically generate messages, advocate ideas, act as followers of users, and act as fake accounts to gain followers themselves. Social bots are difficult to identify because they can behave much like real users.

- Shop bots: bots that shop around online to find the best price for a product a user is looking for. Some bots can observe a user's patterns in navigating a website and then customize that site for the user.

- Spider bots or Web Crawlers: bots that scan content on webpages all over the internet to help Google and other search engines understand how best to answer users' search queries. Spiders download HTML and other resources, such as CSS, JavaScript and images, and use them to process site content.

- Web Scraping Crawlers: bots that read data from websites in order to save it offline and enable its reuse. This may take the form of scraping the entire content of webpages or extracting specific data points, such as the names and prices of products on e-commerce websites.

- Knowbots: bots that collect knowledge for users by automatically visiting websites to retrieve information which fulfils certain criteria.

- Monitoring bots: bots used to monitor the health of a website or system. Downdetector.com is an example of an independent site that provides real-time status information, including outages of websites and other kinds of services.

- Transactional bots: bots used to complete transactions on behalf of humans. For example, a transactional bot can let a customer make a transaction within the context of a conversation.

- Download bots: bots that are used to automatically download software or mobile apps. They can be used to manipulate download statistics, for example to inflate download counts on popular app stores and help new apps appear at the top of the charts.
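Of these, spider bots are the easiest to illustrate in a few lines. The following is a minimal sketch of a breadth-first crawler built on Python's standard `html.parser` and `urllib.parse` modules. It assumes a hypothetical in-memory `SITE` dictionary in place of real HTTP requests, and `extract_links` and `crawl` are illustrative names, not a search engine's actual implementation.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(base_url, html):
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    # Resolve relative links such as "/about" against the page's own URL.
    return [urljoin(base_url, href) for href in parser.links]


def crawl(start_url, fetch, max_pages=10):
    """Breadth-first crawl: fetch each URL once and follow the links it contains."""
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)  # hypothetical fetcher: returns HTML, or None if unavailable
        if html is None:
            continue
        visited.append(url)
        for link in extract_links(url, html):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


# Hypothetical site used instead of real network requests.
SITE = {
    "https://example.com/": '<a href="/about">About</a> <a href="/products">Products</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/products": '<a href="/products/widget">Widget</a>',
    "https://example.com/products/widget": "<p>A widget</p>",
}

if __name__ == "__main__":
    for page in crawl("https://example.com/", SITE.get):
        print(page)
```

The `seen` set is what keeps the crawler from looping forever between pages that link to each other, and `max_pages` caps the amount of work. A real spider would fetch pages over HTTP and, if well-behaved, check each site's robots.txt (Python ships `urllib.robotparser` for this) before crawling.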

Advantages of bots

- They are faster than humans at repetitive tasks.

- They save time for customers and clients.

- They reduce labor costs for organizations.

- They are available 24/7.

- They are customizable.

- They are multipurpose.

- They can offer an improved user experience.

Disadvantages of bots

- Bots cannot be configured to perform every task exactly, and they risk misunderstanding users and causing frustration in the process.

- Humans are still needed to manage bots and to step in when a bot misinterprets a human.

- Bots can be programmed to be malicious.

Bot Mitigation Techniques

As bots evolved, so did mitigation techniques. There are currently three technical approaches to detecting and mitigating bad bots:

- Static approach: static analysis tools can identify web requests and header information correlated with bad bots, passively determining the bot's identity and blocking it if necessary.

- Challenge-based approach: you can equip your website with the ability to proactively check whether traffic originates from human users or bots. Challenge-based bot detectors can check each visitor's ability to use cookies, run JavaScript and interact with CAPTCHA elements. A reduced ability to process these types of elements is a sign of bot traffic.

- Behavioral approach: a behavioral bot mitigation mechanism looks at the behavioral signature of each visitor to see whether it is what it claims to be. Behavioral bot mitigation establishes a baseline of normal behavior for user agents like Google Chrome and checks whether the current user deviates from that behavior. It can also compare behavioral signatures to previous, known signatures of bad bots.
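The static and behavioral checks above can be sketched together in a few lines. This is a toy illustration under loud assumptions: `BAD_UA_SIGNATURES` is a hypothetical signature list, and a simple request-rate z-score stands in for a real behavioral model; it is not how any particular mitigation product works. The challenge-based approach is left out because it needs a real browser to run cookies, JavaScript or CAPTCHAs.

```python
from statistics import mean, pstdev
from typing import Dict, List

# Hypothetical User-Agent substrings that a static rule list might flag.
BAD_UA_SIGNATURES = ("python-requests", "curl", "scrapy")


def static_check(headers: Dict[str, str]) -> bool:
    """Static approach: flag requests whose headers match a known bad-bot signature."""
    ua = headers.get("User-Agent", "").lower()
    if not ua:
        return True  # in this sketch, a missing User-Agent counts as suspicious
    return any(sig in ua for sig in BAD_UA_SIGNATURES)


def behavioral_check(rate: float, baseline: List[float], z_limit: float = 3.0) -> bool:
    """Behavioral approach (simplified): flag a request rate far above the human baseline."""
    mu = mean(baseline)
    sigma = pstdev(baseline) or 1e-9  # avoid division by zero for a constant baseline
    return (rate - mu) / sigma > z_limit


def classify(headers: Dict[str, str], rate: float, baseline: List[float]) -> str:
    if static_check(headers):
        return "bot (static signature)"
    if behavioral_check(rate, baseline):
        return "bot (behavioral anomaly)"
    return "human"


if __name__ == "__main__":
    # Hypothetical requests-per-minute observed for known human sessions.
    human_baseline = [4, 6, 5, 7, 3]
    chrome = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0"}
    print(classify({"User-Agent": "python-requests/2.31"}, 5, human_baseline))
    print(classify(chrome, 40, human_baseline))
    print(classify(chrome, 6, human_baseline))
```

Note that the cheap static check runs first, so only traffic that passes it pays for the behavioral comparison. A bot that spoofs a browser User-Agent slips past the static check but can still be caught by its request rate.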

By combining these approaches, you can detect even evasive bots more reliably and separate them from human traffic. You can apply these approaches yourself, or you can rely on bot mitigation services to apply them for you.