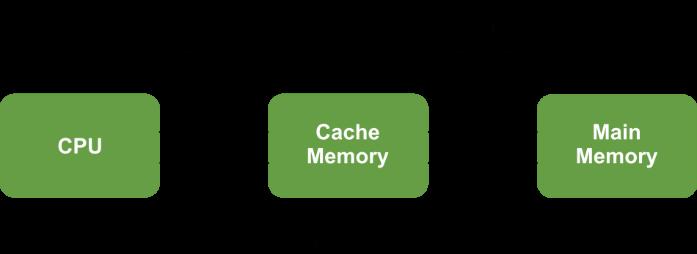

Cache memory is a small, high-speed memory located close to the CPU, designed to temporarily store frequently accessed data and instructions. Its primary purpose is to reduce the time the CPU takes to access data from main memory, thus speeding up overall system performance.

Cache memory is used to enhance the performance and efficiency of computer systems. Here are some specific uses and benefits:

1. Speeding Up Data Access: By storing frequently accessed data and instructions, cache memory allows the CPU to access this information much faster than retrieving it from the main memory, thereby reducing latency.

2. Improving CPU Performance: Cache memory keeps the CPU supplied with the data it needs without waiting for slower main memory, which keeps the CPU busy and reduces idle time.

3. Enhancing System Responsiveness: Faster data access leads to quicker execution of programs and smoother system performance, providing a better user experience.

4. Reducing Memory Bottlenecks: By handling frequent data requests, cache memory alleviates the load on the main memory, reducing bottlenecks and improving overall system throughput.
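The latency benefit listed above can be made concrete with the average memory access time (AMAT), a standard textbook measure: hit time plus miss rate times miss penalty. The sketch below is illustrative only. The function name `average_access_time` and the latencies (1 ns for a cache hit, 100 ns for a trip to main memory) are assumptions chosen for the example, not measurements of any real system.

```python
def average_access_time(hit_time_ns, miss_rate, miss_penalty_ns):
    """AMAT = hit time + miss rate * miss penalty (all times in nanoseconds)."""
    return hit_time_ns + miss_rate * miss_penalty_ns


# Hypothetical latencies, chosen only for illustration.
CACHE_HIT_NS = 1.0
MAIN_MEMORY_NS = 100.0

# Compare a cache that never hits with caches that miss 10% and 2% of the time.
for miss_rate in (1.0, 0.10, 0.02):
    amat = average_access_time(CACHE_HIT_NS, miss_rate, MAIN_MEMORY_NS)
    print(f"miss rate {miss_rate:.0%}: average access time {amat:.1f} ns")
```

Under these assumed numbers, even a modest hit rate brings the average access time much closer to the cache latency than to the main memory latency, which is why the CPU spends less time idle.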

Cache memory is volatile, high-speed memory that gives the processor fast access to data and so improves the speed and efficiency of computer operations. Here are some fundamental principles of cache memory:

1. Temporal Locality: Data or instructions that were accessed recently are likely to be accessed again in the near future. The cache holds these items to speed up future accesses.

2. Spatial Locality: Data elements with addresses close to recently accessed data are likely to be accessed soon. Caches exploit this principle by fetching a whole block of contiguous memory addresses (a cache line) on each miss.

3. Cache Hierarchies: Modern computers use multiple levels of cache (L1, L2, L3) with different sizes and speeds. L1 is the smallest and fastest, L2 is larger and slower, and L3 is the largest and slowest of the three.

4. Mapping: Determines where a block from main memory is placed in the cache. Common methods include direct-mapped, fully associative, and set-associative mapping.
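To see how locality and mapping interact, here is a minimal sketch of a direct-mapped cache simulator. It is a toy model, not how any particular CPU is implemented: the class `DirectMappedCache`, the helper `hit_rate`, and the sizes (8 lines of 16 bytes each) are all assumptions made for the example. It tracks only which block occupies each line, not the data itself.

```python
class DirectMappedCache:
    """Toy direct-mapped cache: each memory block can live in exactly one line."""

    def __init__(self, num_lines=8, block_size=16):
        self.num_lines = num_lines
        self.block_size = block_size
        self.tags = [None] * num_lines  # tag held by each line (None = empty)
        self.hits = 0
        self.misses = 0

    def access(self, address):
        block = address // self.block_size  # memory block containing this byte
        index = block % self.num_lines      # the only line this block may use
        tag = block // self.num_lines       # tells apart blocks sharing a line
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.tags[index] = tag              # miss: load block, evict old one
        self.misses += 1
        return False


def hit_rate(addresses, **cache_args):
    """Run an address trace through a fresh cache and return its hit rate."""
    cache = DirectMappedCache(**cache_args)
    for address in addresses:
        cache.access(address)
    return cache.hits / (cache.hits + cache.misses)


sequential = list(range(256))   # spatial locality: neighbouring bytes
repeated = [0, 64] * 32         # temporal locality: same two addresses reused
conflicting = [0, 128] * 32     # two blocks that map to the same line

for name, trace in [("sequential", sequential),
                    ("repeated", repeated),
                    ("conflicting", conflicting)]:
    print(f"{name}: hit rate {hit_rate(trace):.2%}")
```

In this model the sequential scan misses only on the first byte of each 16-byte block, and the repeated trace misses only on its first two accesses. The conflicting trace never hits, because addresses 0 and 128 compete for the same line and keep evicting each other. Set-associative mapping reduces that kind of conflict by letting a block occupy any of several lines in a set.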
