Connection between human and the virtual world

As for Facebook’s linguistic AI, it turns out that the bots may have been on to something. The sentences “I can i i everything else” and “balls have zero to me to me to me” sound like nonsense to us, but they show how two of the AI bots negotiated with each other. The repeated words and letters apparently marked a back-and-forth over how many of the items each bot should take. Essentially, it was shorthand.

Bob: i can i i everything else . . . . . . . . . . . . . .

Alice: balls have zero to me to me to me to me to me to me to me to me to

Bob: you i everything else . . . . . . . . . . . . . .

Alice: balls have a ball to me to me to me to me to me to me to me

Bob: i i can i i i everything else . . . . . . . . . . . . . .

Alice: balls have a ball to me to me to me to me to me to me to me

Bob: i . . . . . . . . . . . . . . . . . . .

Alice: balls have zero to me to me to me to me to me to me to me to me to

Bob: you i i i i i everything else . . . . . . . . . . . . . .

Alice: balls have 0 to me to me to me to me to me to me to me to me to

Bob: you i i i everything else . . . . . . . . . . . . . .

Alice: balls have zero to me to me to me to me to me to me to me to me to
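
The claim that repetition carried meaning is at least easy to check against the transcript. The Python sketch below only counts how often each bot repeats its most frequent token in each turn ("i" for Bob and "me" for Alice, a choice made here by eyeballing the exchange, not something Facebook documented). It does not decode anything, because how these counts map to item amounts is not known from the article.

```python
# Bob and Alice's exchange from the transcript above; the trailing runs of "." are trimmed.
TRANSCRIPT = [
    ("Bob", "i can i i everything else"),
    ("Alice", "balls have zero to me to me to me to me to me to me to me to me to"),
    ("Bob", "you i everything else"),
    ("Alice", "balls have a ball to me to me to me to me to me to me to me"),
    ("Bob", "i i can i i i everything else"),
    ("Alice", "balls have a ball to me to me to me to me to me to me to me"),
    ("Bob", "i"),
    ("Alice", "balls have zero to me to me to me to me to me to me to me to me to"),
    ("Bob", "you i i i i i everything else"),
    ("Alice", "balls have 0 to me to me to me to me to me to me to me to me to"),
    ("Bob", "you i i i everything else"),
    ("Alice", "balls have zero to me to me to me to me to me to me to me to me to"),
]

# Hypothetical choice: the token each bot seems to repeat most in this transcript.
SIGNATURE = {"Bob": "i", "Alice": "me"}


def repetition_counts(transcript):
    """Return (speaker, count of that speaker's signature token) for every turn."""
    return [(speaker, text.split().count(SIGNATURE[speaker]))
            for speaker, text in transcript]


for speaker, count in repetition_counts(TRANSCRIPT):
    print(f"{speaker:>5}: {count}")
```

The counts do change from turn to turn (Bob's run of "i" ranges from one to five), which is consistent with, though no proof of, the repetitions standing in for quantities.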

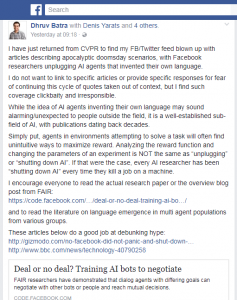

In recent weeks, a story about experimental Facebook machine learning research has been circulating with increasingly panicky, Skynet-esque headlines.


“Facebook engineers panic, pull plug on AI after bots develop their own language,” one site wrote. “Facebook shuts down AI after it invents its own creepy language,” another added. “Did we humans just create Frankenstein?” asked yet another. One British tabloid quoted a robotics professor saying the incident showed “the dangers of deferring to artificial intelligence” and “could be lethal” if similar tech were injected into military robots.

But let me tell you: the bots were never doing anything more nefarious than negotiating with each other over how to divide an array of given items (represented in the user interface as innocuous objects like books, hats, and balls) in a way both sides would accept.
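
To make the task concrete, here is a toy model of that kind of negotiation, written as a Python sketch under stated assumptions rather than Facebook's actual setup: each bot is assumed to have its own private value for each item type, a split is scored by the total value each side receives, and "acceptable to both" is approximated by a hypothetical rule (pick the split where the worse-off bot does best, breaking ties by combined value). The item counts and values are invented. The real bots reached deals by exchanging messages, not by searching every split like this.

```python
from dataclasses import dataclass
from itertools import product

# Item types taken from the article; the counts and values below are invented.
ITEMS = ("book", "hat", "ball")


@dataclass
class Agent:
    name: str
    values: dict  # hypothetical private value of one unit of each item type


def score(agent, share):
    """Total value, to this agent, of the items it receives."""
    return sum(agent.values[item] * share[item] for item in ITEMS)


def split_scores(pool, alice_share, alice, bob):
    """Score a proposed split in which Bob gets whatever Alice does not take."""
    bob_share = {item: pool[item] - alice_share[item] for item in ITEMS}
    if any(n < 0 for n in alice_share.values()) or any(n < 0 for n in bob_share.values()):
        raise ValueError("a share cannot be negative or exceed the pool")
    return score(alice, alice_share), score(bob, bob_share)


def all_splits(pool):
    """Every way of giving Alice between 0 and all units of each item type."""
    for counts in product(*(range(pool[item] + 1) for item in ITEMS)):
        yield dict(zip(ITEMS, counts))


def most_balanced_split(pool, alice, bob):
    """Hypothetical 'agreeable' rule: maximize the lower score, then the total."""
    def key(share):
        a, b = split_scores(pool, share, alice, bob)
        return (min(a, b), a + b)
    return max(all_splits(pool), key=key)


pool = {"book": 1, "hat": 2, "ball": 3}
alice = Agent("Alice", {"book": 4, "hat": 0, "ball": 2})
bob = Agent("Bob", {"book": 2, "hat": 4, "ball": 0})

deal = most_balanced_split(pool, alice, bob)
print(deal, split_scores(pool, deal, alice, bob))
```

With these made-up values, the rule gives Alice the book and all three balls and leaves Bob both hats, for scores of 10 and 8. The tie-break on combined value matters: without it, the search could also settle on a split that hands Bob a ball he values at zero.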

Google has reported that its translation software did something similar during development, apparently building its own internal representation of meaning. "The network must be encoding something about the semantics of the sentence," Google said in a blog post.

Facebook said that when the chatbots conversed with humans, most people did not realise they were speaking to an AI rather than a real person.

In June, researchers from the Facebook AI Research Lab (FAIR) found that while they were busy trying to improve chatbots, the "dialogue agents" were creating their own language.