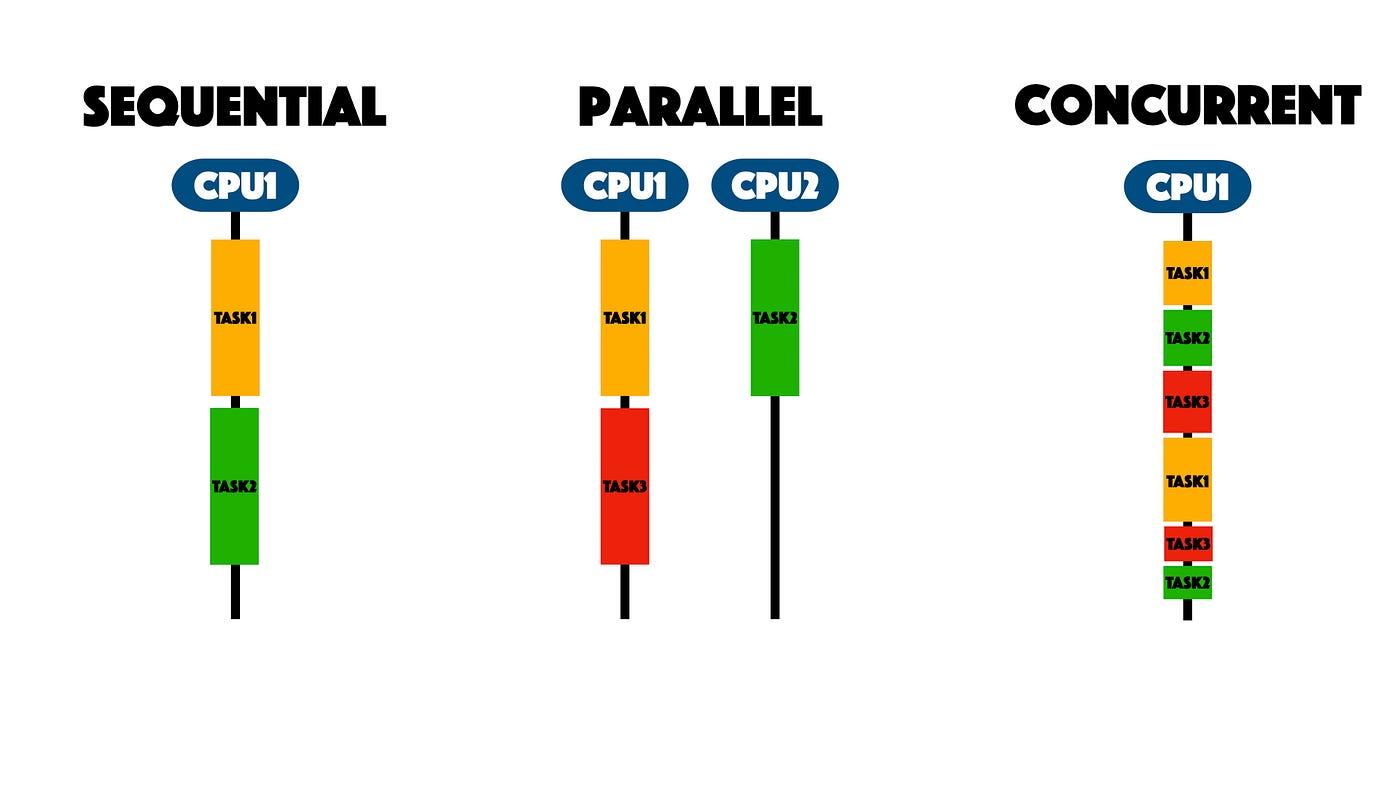

Concurrency is the ability of a system to make progress on multiple tasks or processes during overlapping time periods, either by interleaving them on a single processor or by running them truly simultaneously on several. It's a fundamental concept in computer science, especially in the context of multitasking operating systems, parallel computing, and distributed systems.

Concurrency allows systems to be more efficient, responsive, and capable of handling multiple tasks at once. It is crucial for applications that require real-time processing, responsiveness, and scalability.

Multithreading:

Concurrency is often implemented using threads. Multiple threads can run concurrently within the same application, sharing the same memory space but executing different parts of the code. Modern applications commonly use this to keep the user interface responsive while background processing runs at the same time.
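As a minimal sketch of threads sharing one memory space, the Python snippet below starts one thread per document and lets each write its result into the same dictionary. The names `word_count`, `documents`, and `results` are made up for illustration. Each thread writes to its own key, which is why this small example gets away without a lock in CPython.

```python
import threading

# Shared memory: every thread writes into this same dictionary.
results = {}

def word_count(name, text):
    # Each thread writes to its own key, so this sketch needs no lock.
    results[name] = len(text.split())

# Hypothetical input data for the illustration.
documents = {
    "a": "concurrency lets tasks overlap",
    "b": "threads share one memory space",
}

threads = [
    threading.Thread(target=word_count, args=(name, text))
    for name, text in documents.items()
]
for t in threads:
    t.start()
for t in threads:
    t.join()  # wait for every worker before reading the results

print(results)
```

The `join()` calls matter: without them the main thread could read `results` before the workers have finished.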

Synchronization:

Since concurrent tasks may access shared resources, mechanisms like locks, semaphores, and monitors are used to synchronize access and prevent conflicts, ensuring data integrity.
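To make this concrete, here is a small Python sketch that combines two of these mechanisms: a semaphore caps how many threads may use a resource at once, and a lock protects the counters that track usage. The limit of 2 and the names `use_resource`, `active`, and `peak` are assumptions chosen for the example.

```python
import threading
import time

MAX_CONCURRENT = 2  # hypothetical limit on simultaneous users of the resource
slots = threading.Semaphore(MAX_CONCURRENT)
stats_lock = threading.Lock()
active = 0  # threads currently inside the guarded section
peak = 0    # highest value of `active` observed so far

def use_resource():
    global active, peak
    with slots:  # blocks while MAX_CONCURRENT threads are already inside
        with stats_lock:  # the lock protects the shared counters
            active += 1
            peak = max(peak, active)
        time.sleep(0.01)  # stand-in for real work on the shared resource
        with stats_lock:
            active -= 1

workers = [threading.Thread(target=use_resource) for _ in range(6)]
for w in workers:
    w.start()
for w in workers:
    w.join()

print("peak concurrent users:", peak)
```

Six threads compete, but the semaphore never lets `peak` rise above `MAX_CONCURRENT`.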

Challenges of Concurrency

Deadlocks: Occur when two or more threads are blocked forever, each waiting on the other to release a resource. For instance, Thread A holds Lock 1 and waits for Lock 2, while Thread B holds Lock 2 and waits for Lock 1.
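One common way to avoid this situation is to make every thread acquire locks in the same global order. The sketch below assumes a hypothetical `Account` class with a numeric `account_id` and always locks the account with the smaller id first. It also assumes the two accounts are distinct, since locking the same non-reentrant lock twice would block.

```python
import threading

class Account:
    def __init__(self, account_id, balance):
        self.account_id = account_id
        self.balance = balance
        self.lock = threading.Lock()

def transfer(src, dst, amount):
    # Always lock the account with the smaller id first, so two opposite
    # transfers can never each hold one lock while waiting for the other.
    first, second = sorted((src, dst), key=lambda acct: acct.account_id)
    with first.lock:
        with second.lock:
            src.balance -= amount
            dst.balance += amount

a = Account(1, 100)
b = Account(2, 100)

def many_transfers(src, dst):
    for _ in range(1000):
        transfer(src, dst, 1)

# The two threads move money in opposite directions, which is the pattern
# that would risk a deadlock if each locked its own source account first.
t1 = threading.Thread(target=many_transfers, args=(a, b))
t2 = threading.Thread(target=many_transfers, args=(b, a))
t1.start()
t2.start()
t1.join()
t2.join()

print(a.balance, b.balance)  # 100 100
```

Because both threads agree on the order, neither can hold one lock while waiting for the other, and the opposite transfers cancel out.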

Race Conditions: Happen when the outcome of a program depends on the sequence or timing of events the program does not control, such as thread scheduling. If multiple threads modify the same resource at the same time without proper synchronization, updates can be lost or data corrupted, and the result may differ from one run to the next.
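The classic illustration is a shared counter. In the Python sketch below, `counter += 1` is a read, an add, and a write, so another thread can run between those steps. The function names and the thread and iteration counts are arbitrary choices for the example. Whether the unsynchronized version actually loses updates depends on the interpreter version and on timing, so it may well print the correct total on a given run.

```python
import threading

counter = 0
counter_lock = threading.Lock()

def unsafe_increment(times):
    global counter
    for _ in range(times):
        counter += 1  # read, add, write: not guaranteed to be atomic

def safe_increment(times):
    global counter
    for _ in range(times):
        with counter_lock:  # only one thread at a time performs the update
            counter += 1

def run(worker, n_threads=4, times=10_000):
    """Reset the counter, run `worker` in several threads, return the total."""
    global counter
    counter = 0
    threads = [
        threading.Thread(target=worker, args=(times,)) for _ in range(n_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter

unsafe_total = run(unsafe_increment)  # may be less than 40000
safe_total = run(safe_increment)      # 40000 on every run

print("without lock:", unsafe_total)
print("with lock:", safe_total)
```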

Starvation: A situation where a thread is perpetually denied access to a resource because other threads are continuously given priority.

Real-World Examples:

Web Servers: Handling multiple client requests concurrently.

Operating Systems: Running multiple applications at the same time.

Databases: Processing multiple transactions simultaneously while maintaining data consistency.
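As a rough sketch of the web server case, the snippet below uses a thread pool from Python's standard library to handle several simulated requests concurrently. `handle_request` is a hypothetical handler, and the sleep stands in for I/O such as a database call. A real server would accept network connections instead of iterating over a range.

```python
from concurrent.futures import ThreadPoolExecutor
import time

def handle_request(request_id):
    # Hypothetical handler: the sleep stands in for I/O such as a database call.
    time.sleep(0.05)
    return f"response for request {request_id}"

# Four worker threads share the eight simulated requests.
with ThreadPoolExecutor(max_workers=4) as pool:
    # map() yields results in the order of the inputs, not completion order.
    responses = list(pool.map(handle_request, range(8)))

for line in responses:
    print(line)
```

With four workers, the eight requests are handled in roughly two batches rather than one after another.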