Deadlocks:

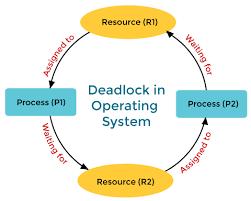

Definition: A deadlock occurs when every process in a group is waiting for a resource that another process in the same group holds. None of them can make progress, because each needs something that is tied up by another, and no holder will release its resource until its own request is satisfied. This can freeze part of a system indefinitely: the affected processes cannot continue unless something outside the group intervenes.

Conditions for Deadlock: A deadlock can occur only if the following four conditions hold simultaneously:

Mutual Exclusion: At least one resource must be held in a non-shareable mode; that is, only one process can use the resource at any given time.

Hold and Wait: A process that holds at least one resource is waiting to acquire additional resources that are currently held by other processes.

No Pre-emption: Resources that are already allocated to a process cannot be forcibly taken away; they can only be released voluntarily by the process holding them.

Circular Wait: A set of processes is waiting in a circular chain, with each process holding a resource that the next process in the chain needs.
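The four conditions above can be observed in a small experiment. The following is a minimal sketch, assuming Python threads stand in for processes and `threading.Lock` objects stand in for resources; the names `worker`, `lock_a`, `lock_b`, and `results` are illustrative only. A timeout is used on the second acquisition so that the program reports the circular wait instead of hanging forever.

```python
import threading

lock_a = threading.Lock()
lock_b = threading.Lock()
barrier = threading.Barrier(2)   # lets both threads reach the same point
results = {}

def worker(name, first, second):
    with first:                              # mutual exclusion: hold one resource
        barrier.wait()                       # both threads now hold their first lock
        # Hold and wait: request the lock the other thread is holding.
        got = second.acquire(timeout=0.2)    # timeout only so the demo terminates
        results[name] = got
        if got:
            second.release()
        barrier.wait()                       # keep holding `first` until both attempts end

# T1 takes A then B, T2 takes B then A: a circular wait.
t1 = threading.Thread(target=worker, args=("T1", lock_a, lock_b))
t2 = threading.Thread(target=worker, args=("T2", lock_b, lock_a))
t1.start(); t2.start()
t1.join(); t2.join()

print(results)   # both values are False: neither thread got its second lock
```

Without the timeout, both threads would block forever, because neither lock can be taken away from its holder (no pre-emption).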

Deadlock Prevention and Avoidance: Several strategies can be employed to prevent or avoid deadlocks, or to recover from them once they occur:

Deadlock Prevention: This approach focuses on ensuring that at least one of the four conditions for deadlock cannot hold. For instance, the system might disallow circular wait by imposing a strict order in which resources can be requested.
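As an illustration of imposing a strict request order, here is a minimal sketch that assumes threads and locks in place of processes and resources. The helper `with_both` and the use of `id()` as the global ordering key are assumptions made for this example; a real system would more likely assign each resource a fixed rank.

```python
import threading

def with_both(lock_1, lock_2, action):
    """Acquire two distinct locks in one global order, then run action()."""
    # Sorting by a fixed key means every thread requests the locks in the
    # same order, so a circular wait cannot form.
    first, second = sorted((lock_1, lock_2), key=id)
    with first:
        with second:
            action()

lock_x = threading.Lock()
lock_y = threading.Lock()
counter = {"value": 0}

def bump():
    counter["value"] += 1

def worker(a, b, rounds):
    for _ in range(rounds):
        with_both(a, b, bump)

# The two threads name the locks in opposite orders, which would risk a
# deadlock if each simply acquired them in the order given.
t1 = threading.Thread(target=worker, args=(lock_x, lock_y, 1000))
t2 = threading.Thread(target=worker, args=(lock_y, lock_x, 1000))
t1.start(); t2.start()
t1.join(); t2.join()

print(counter["value"])   # 2000
```

The sketch assumes the two locks are different objects; passing the same lock twice would block, because `threading.Lock` is not re-entrant.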

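Deadlock Avoidance: In this strategy, the system dynamically examines the resource-allocation state before granting each request, so that a circular wait condition can never arise. This requires advance information, such as the maximum number of resources each process may claim. The Banker's algorithm is a well-known method for deadlock avoidance: it grants a request only if the resulting state is safe, meaning that there is still some order in which every process can obtain the resources it needs and run to completion.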
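The safety check at the heart of the Banker's algorithm can be sketched as follows. This is a simplified illustration, not a production allocator: the function names `is_safe` and `can_grant`, the list-of-lists layout, and the sample numbers (five processes, three resource types) are all assumptions chosen for the example. `need[i]` is the maximum claim of process `i` minus what it currently holds.

```python
def is_safe(available, allocation, need):
    """Return (safe, order): whether all processes can finish, and one such order."""
    work = list(available)
    finished = [False] * len(allocation)
    order = []
    progressed = True
    while progressed:
        progressed = False
        for i in range(len(allocation)):
            # Process i can finish if its remaining need fits in what is free.
            if not finished[i] and all(n <= w for n, w in zip(need[i], work)):
                # When it finishes, it returns everything it holds.
                work = [w + a for w, a in zip(work, allocation[i])]
                finished[i] = True
                order.append(i)
                progressed = True
    return all(finished), order

def can_grant(pid, request, available, allocation, need):
    """Grant a request only if the state after granting it is still safe."""
    if any(r > n for r, n in zip(request, need[pid])):
        raise ValueError("request exceeds the declared maximum")
    if any(r > a for r, a in zip(request, available)):
        return False  # not enough free resources; the process must wait
    new_available = [a - r for a, r in zip(available, request)]
    new_allocation = [row[:] for row in allocation]
    new_need = [row[:] for row in need]
    new_allocation[pid] = [a + r for a, r in zip(allocation[pid], request)]
    new_need[pid] = [n - r for n, r in zip(need[pid], request)]
    return is_safe(new_available, new_allocation, new_need)[0]

# Hypothetical state: 5 processes, 3 resource types.
available = [3, 3, 2]
allocation = [[0, 1, 0], [2, 0, 0], [3, 0, 2], [2, 1, 1], [0, 0, 2]]
need = [[7, 4, 3], [1, 2, 2], [6, 0, 0], [0, 1, 1], [4, 3, 1]]

print(is_safe(available, allocation, need))                    # (True, [1, 3, 4, 0, 2])
print(can_grant(1, [1, 0, 2], available, allocation, need))    # True
print(can_grant(4, [3, 3, 0], available, allocation, need))    # False
```

The last request is refused even though enough resources are free at that moment, because granting it would leave no order in which every process could finish.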

Deadlock Detection and Recovery: If deadlocks cannot be avoided or prevented, detection and recovery mechanisms are necessary. The system can periodically check for deadlocks and, upon finding one, take corrective actions such as terminating one or more processes to break the cycle or forcibly pre-empting resources.
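One common way to check for deadlocks, when each resource has a single instance, is to look for a cycle in a wait-for graph. The sketch below assumes the graph is given as a dictionary mapping each process to the processes it is waiting on; the function name `find_cycle` and the process names are hypothetical.

```python
def find_cycle(wait_for):
    """Return a list of processes forming a cycle, or None if there is none."""
    WHITE, GRAY, BLACK = 0, 1, 2      # unvisited, on current path, fully explored
    color = {p: WHITE for p in wait_for}
    path = []

    def visit(p):
        color[p] = GRAY
        path.append(p)
        for q in wait_for.get(p, ()):
            state = color.get(q, WHITE)
            if state == GRAY:
                # q is already on the current path: the path from q onward is a cycle.
                return path[path.index(q):]
            if state == WHITE:
                cycle = visit(q)
                if cycle:
                    return cycle
        path.pop()
        color[p] = BLACK
        return None

    for p in list(wait_for):
        if color[p] == WHITE:
            cycle = visit(p)
            if cycle:
                return cycle
    return None

# P1 waits on P2, P2 on P3, P3 on P1 (a cycle); P4 waits on P1 but is not in the cycle.
graph = {"P1": ["P2"], "P2": ["P3"], "P3": ["P1"], "P4": ["P1"]}
print(find_cycle(graph))                               # ['P1', 'P2', 'P3']
print(find_cycle({"P1": ["P2"], "P2": ["P3"]}))        # None
```

A recovery step could then pick one process from the returned cycle to terminate or to pre-empt resources from; how that victim is chosen is a policy decision outside this sketch.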

Starvation:

Definition: Starvation, also known as indefinite blocking, occurs when a process waits indefinitely for a resource because other processes are continuously given preference. Unlike in a deadlock, where none of the processes involved can ever proceed, under starvation the rest of the system keeps making progress while certain processes are perpetually deprived of the resources they need.

Causes of Starvation: Starvation typically arises in scenarios where processes are prioritized, and lower-priority processes are repeatedly bypassed by higher-priority ones. It can also occur if resources are allocated using certain scheduling algorithms that do not ensure fairness, such as those that always prefer shorter jobs over longer ones.

Preventing Starvation: To prevent starvation, operating systems can implement various fairness mechanisms, such as:

Aging: Gradually increasing the priority of waiting processes over time ensures that they eventually get a chance to execute.
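To make the effect of aging concrete, here is a small simulation. It is a sketch under stated assumptions: lower numbers mean higher priority, one job runs per tick, a new high-priority job arrives every tick, and the names `pick_next`, `simulate`, and `aging_rate` are invented for the example.

```python
def pick_next(ready, now, aging_rate):
    """Pick the job with the best effective priority (lower value runs first)."""
    def effective(job):
        waited = now - job["arrived"]
        return job["priority"] - aging_rate * waited   # waiting improves priority
    return min(ready, key=effective)

def simulate(ticks, aging_rate):
    # One low-priority job competes with a steady stream of high-priority jobs.
    ready = [{"name": "batch", "priority": 10, "arrived": 0}]
    schedule = []
    for now in range(ticks):
        ready.append({"name": f"hi{now}", "priority": 1, "arrived": now})
        job = pick_next(ready, now, aging_rate)
        ready.remove(job)
        schedule.append(job["name"])
    return schedule

print(simulate(8, aging_rate=0))
# ['hi0', 'hi1', 'hi2', 'hi3', 'hi4', 'hi5', 'hi6', 'hi7']  (batch starves)
print(simulate(8, aging_rate=2))
# ['hi0', 'hi1', 'hi2', 'hi3', 'hi4', 'batch', 'hi5', 'hi6']
```

With `aging_rate=0` the scheduler is a strict priority scheduler and the low-priority job never runs; with aging, its effective priority eventually overtakes the newcomers.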

Fair Queuing: Distributing resources based on a fair-share approach, where each process gets an equitable amount of CPU time or other resources, can help prevent starvation.
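A fair-share policy can be sketched by always running the process that has received the least CPU time relative to its share. The function `fair_share` and the `weights` mapping below are assumptions for illustration, not a description of any particular operating system's scheduler.

```python
def fair_share(weights, ticks):
    """Give each tick to the process with the lowest used-time-to-weight ratio."""
    used = {name: 0 for name in weights}
    order = []
    for _ in range(ticks):
        # Ties go to the process listed first in `weights`.
        name = min(weights, key=lambda p: used[p] / weights[p])
        used[name] += 1
        order.append(name)
    return used, order

# Process A is entitled to twice the share of process B.
used, order = fair_share({"A": 2, "B": 1}, ticks=6)
print(used)    # {'A': 4, 'B': 2}
print(order)   # ['A', 'B', 'A', 'A', 'B', 'A']
```

Because every process's ratio keeps rising while it runs, no process with a positive weight can be bypassed indefinitely.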

Round-Robin Scheduling: This scheduling technique allocates a fixed time slice to each process in turn, ensuring that all processes get a chance to execute, thereby mitigating the risk of starvation.
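Round-robin scheduling as described above can be sketched with a simple queue. This assumes all processes are ready at time zero and ignores context-switch cost; `round_robin`, `burst_times`, and `quantum` are names chosen for the example.

```python
from collections import deque

def round_robin(burst_times, quantum):
    """Return the execution timeline as a list of (process, time_run) pairs."""
    queue = deque(burst_times.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)        # run for one time slice at most
        timeline.append((name, run))
        if remaining > run:
            # Not finished: go to the back of the queue with the leftover work.
            queue.append((name, remaining - run))
    return timeline

print(round_robin({"A": 5, "B": 2, "C": 4}, quantum=2))
# [('A', 2), ('B', 2), ('C', 2), ('A', 2), ('C', 2), ('A', 1)]
```

Every process reaches the front of the queue after at most one time slice per other process, which is why no process waits indefinitely.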

Deadlocks and starvation are critical issues in operating systems that can significantly affect the performance and reliability of a system. In a deadlock, the processes involved come to a complete standstill, each perpetually waiting on another; in starvation, the system keeps running but some processes are indefinitely deprived of resources. Understanding these concepts and applying strategies to prevent, avoid, or mitigate them is essential for designing efficient and reliable operating systems. By carefully managing resources and ensuring fairness in scheduling, system designers can minimize the risk of deadlocks and starvation and improve the overall stability of their systems.